Machine learning has become an indispensable tool in recent years. It is used in many fields, in industry, in research laboratories and in businesses alike. Deep learning techniques are often regarded as black boxes. Classical machine learning methods, by comparison, make it easier to follow the reasoning behind their results. Getting good predictions from machine learning algorithms requires a number of logical steps that help reason about cause and effect and therefore support a better interpretation. One of these steps is key to interpretability: feature selection. Several techniques exist to find the features most relevant to the phenomenon under study. In this article, we look at a few of them.

By the end of this tutorial, you will be able to:

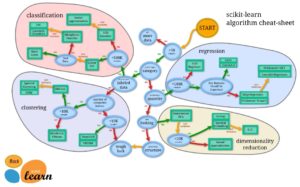

- Implement feature selection with scikit-learn,

- Understand why you should never rely on a single technique,

- Select the best features by combining several methods.

Set Directory

```python
import os

import numpy as np
import pandas as pd
import seaborn as sns

from sklearn.linear_model import LinearRegression, Ridge, Lasso
from sklearn.feature_selection import RFE, f_regression
from sklearn.preprocessing import MinMaxScaler
from sklearn.ensemble import RandomForestRegressor
from xgboost import XGBRegressor

# Working directory that contains the _data/ folder (adapt this path to your machine)
os.chdir("D:/Cours_ESI/Evaluation")
```

Problem statement

The data used in this tutorial come from weather stations in France. The goal is to predict the flu rate (`TauxGrippe`) in France by region and by week. In total, we have 19 features and 11,484 observations.

Data pre-processing

```python
# Load the training set
train = pd.read_csv('_data/train_set.csv', delimiter=',', decimal=',', low_memory=False)

# Drop the unnamed index column (typically a DataFrame index saved with the file)
train.drop(["Unnamed: 0"], axis=1, inplace=True)

# Cast the weather, age-group and sex-proportion columns to float
train = train.astype({
    "ff": "float64", "t": "float64", "u": "float64", "n": "float64",
    "pression": "float64", "precipitation": "float64",
    "[0-19 ans]": "float64", "[20-39 ans]": "float64", "[40-59 ans]": "float64",
    "[60-74 ans]": "float64", "[75 ans plus]": "float64",
    "Prop H": "float64", "Prop F": "float64"})

# Give the columns Python-friendly names
train = train.rename(columns={
    "[0-19 ans]": "0_19ans", "[20-39 ans]": "20_39ans", "[40-59 ans]": "40_59ans",
    "[60-74 ans]": "60_74ans", "[75 ans plus]": "75etplus",
    "Prop H": "Prop_h", "Prop F": "Prop_f"})

# Keep the 19 features and the target, in a fixed order
train = train.loc[:, ["week", "region_code", "ff", "t", "u", "n", "pression", "precipitation",
                      "Year", "0_19ans", "20_39ans", "40_59ans", "60_74ans", "75etplus",
                      "Prop_h", "Prop_f", "reqgoo1", "reqgoo2", "reqgoo3", "TauxGrippe"]]

train.shape
# (11484, 20)
```

```python
# Check each column for missing values
train.isnull().any()
```

All 20 columns, from `week` to `TauxGrippe`, return `False`: the dataset has no missing values.

```python
# Pairwise scatter plots of all columns
sns.pairplot(train)
```


Define features and target

```python
# Separate the target (flu rate) from the 19 features
delete = ["TauxGrippe"]
features = train.drop(delete, axis=1)
target = train.TauxGrippe
```

Define the feature selection methods

- Predictive Power Score (PPS)

```python
import warnings

import matplotlib.pyplot as plt
import ppscore as pps
import seaborn as sns

warnings.filterwarnings("ignore")

# PPS of each column x for predicting each column y, pivoted into a y-by-x matrix
matrix_df = pps.matrix(train)[['x', 'y', 'ppscore']].pivot(columns='x', index='y', values='ppscore')

plt.figure(figsize=(16, 12))
sns.heatmap(matrix_df, cmap="coolwarm", annot=True, fmt='.2f')
```


With this technique, only one feature, `week`, appears relevant to the model (in the `TauxGrippe` row of the heatmap). It is not obvious, however, that this feature alone can predict the flu rate in France well. It is also hard to draw a cause-effect link between the week number and the flu rate. Still, it is a useful signal: the flu rate does vary from one week to the next.
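To make the idea behind PPS more concrete, here is a minimal sketch of a PPS-like score for a numeric target, written directly with scikit-learn. It assumes the definition given in the `ppscore` README: a cross-validated decision tree is compared with a naive baseline that always predicts the median, using mean absolute error. It is a simplified illustration, not the library's exact implementation. The function `pps_like_score` and the synthetic weekly data are hypothetical.

```python
import numpy as np
from sklearn.model_selection import KFold, cross_val_predict
from sklearn.tree import DecisionTreeRegressor


def pps_like_score(x, y, n_splits=4, random_state=0):
    """Hypothetical, simplified PPS-style score for a numeric target y.

    Returns 1.0 when x predicts y perfectly and 0.0 when a decision tree
    using x does no better than always predicting the median of y.
    """
    x = np.asarray(x, dtype=float).reshape(-1, 1)
    y = np.asarray(y, dtype=float)
    # Naive baseline: always predict the median of y
    mae_baseline = np.mean(np.abs(y - np.median(y)))
    if mae_baseline == 0:
        return 0.0
    # Out-of-fold predictions of a decision tree that only sees x
    cv = KFold(n_splits=n_splits, shuffle=True, random_state=random_state)
    tree = DecisionTreeRegressor(random_state=random_state)
    y_pred = cross_val_predict(tree, x, y, cv=cv)
    mae_model = np.mean(np.abs(y - y_pred))
    # Relative improvement over the baseline, clipped at 0
    return max(0.0, 1.0 - mae_model / mae_baseline)


# Synthetic data: a seasonal (non-monotonic) signal over 5 "years" of weeks
rng = np.random.default_rng(0)
weeks = np.tile(np.arange(1, 53), 5)
seasonal = np.cos(2 * np.pi * weeks / 52) + rng.normal(scale=0.1, size=weeks.size)
noise = rng.normal(size=weeks.size)

print(round(pps_like_score(weeks, seasonal), 2))  # high: week predicts the seasonal signal
print(round(pps_like_score(weeks, noise), 2))     # near 0: week carries no information
```

Because the score relies on a decision tree, it can pick up non-linear and non-monotonic patterns such as seasonal peaks.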

- Correlation coefficient (Kendall's τ)

```python
from matplotlib import pyplot

# Kendall rank correlation between every pair of columns, colour-coded
train.corr(method='kendall').style.format("{:.2}").background_gradient(cmap=pyplot.get_cmap('coolwarm'))
```

|  | week | region_code | ff | t | u | n | pression | precipitation | Year | 0_19ans | 20_39ans | 40_59ans | 60_74ans | 75etplus | Prop_h | Prop_f | reqgoo1 | reqgoo2 | reqgoo3 | TauxGrippe |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| week | 1.0 | 0.0 | -0.069 | 0.014 | -0.027 | 0.092 | -0.017 | 0.024 | 0.95 | -0.12 | -0.32 | -0.22 | 0.32 | 0.2 | -0.021 | 0.021 | -0.11 | -0.0019 | -0.042 | 0.028 |
| region_code | 0.0 | 1.0 | -0.054 | 0.1 | -0.22 | -0.22 | -0.1 | -0.037 | 0.0 | -0.49 | -0.28 | 0.083 | 0.46 | 0.39 | -0.06 | 0.06 | 0.0087 | -0.14 | -0.14 | 0.07 |
| ff | -0.069 | -0.054 | 1.0 | -0.11 | 0.042 | 0.095 | 0.076 | 0.14 | -0.066 | 0.14 | 0.0088 | -0.18 | -0.048 | -0.053 | -0.11 | 0.11 | 0.036 | 0.067 | 0.077 | 0.068 |
| t | 0.014 | 0.1 | -0.11 | 1.0 | -0.37 | -0.27 | 0.046 | -0.022 | -0.0012 | -0.058 | -0.034 | -0.013 | 0.065 | 0.042 | -0.0074 | 0.0074 | -0.23 | -0.25 | -0.28 | -0.41 |
| u | -0.027 | -0.22 | 0.042 | -0.37 | 1.0 | 0.47 | 0.033 | 0.24 | -0.038 | 0.13 | 0.056 | 0.056 | -0.14 | -0.074 | 0.066 | -0.066 | 0.13 | 0.14 | 0.16 | 0.19 |
| n | 0.092 | -0.22 | 0.095 | -0.27 | 0.47 | 1.0 | -0.16 | 0.33 | 0.097 | 0.061 | -0.0056 | 0.03 | -0.051 | -0.032 | 0.045 | -0.045 | 0.047 | 0.11 | 0.11 | 0.11 |
| pression | -0.017 | -0.1 | 0.076 | 0.046 | 0.033 | -0.16 | 1.0 | -0.18 | -0.018 | 0.22 | 0.071 | -0.19 | -0.096 | -0.13 | 0.017 | -0.017 | -0.031 | -0.094 | -0.097 | -0.011 |
| precipitation | 0.024 | -0.037 | 0.14 | -0.022 | 0.24 | 0.33 | -0.18 | 1.0 | 0.021 | 0.017 | 0.0017 | 0.0079 | -0.022 | 0.0017 | 0.032 | -0.032 | -0.0071 | -0.004 | -0.007 | -0.016 |
| Year | 0.95 | 0.0 | -0.066 | -0.0012 | -0.038 | 0.097 | -0.018 | 0.021 | 1.0 | -0.13 | -0.34 | -0.23 | 0.34 | 0.21 | -0.022 | 0.022 | -0.11 | 0.0073 | -0.035 | 0.044 |
| 0_19ans | -0.12 | -0.49 | 0.14 | -0.058 | 0.13 | 0.061 | 0.22 | 0.017 | -0.13 | 1.0 | 0.61 | -0.38 | -0.74 | -0.75 | 0.17 | -0.17 | 0.00094 | 0.13 | 0.14 | -0.036 |
| 20_39ans | -0.32 | -0.28 | 0.0088 | -0.034 | 0.056 | -0.0056 | 0.071 | 0.0017 | -0.34 | 0.61 | 1.0 | -0.086 | -0.82 | -0.83 | 0.26 | -0.26 | 0.026 | 0.036 | 0.057 | -0.013 |
| 40_59ans | -0.22 | 0.083 | -0.18 | -0.013 | 0.056 | 0.03 | -0.19 | 0.0079 | -0.23 | -0.38 | -0.086 | 1.0 | 0.088 | 0.19 | 0.12 | -0.12 | 0.086 | -0.09 | -0.086 | -0.025 |
| 60_74ans | 0.32 | 0.46 | -0.048 | 0.065 | -0.14 | -0.051 | -0.096 | -0.022 | 0.34 | -0.74 | -0.82 | 0.088 | 1.0 | 0.79 | -0.2 | 0.2 | -0.02 | -0.096 | -0.12 | 0.038 |
| 75etplus | 0.2 | 0.39 | -0.053 | 0.042 | -0.074 | -0.032 | -0.13 | 0.0017 | 0.21 | -0.75 | -0.83 | 0.19 | 0.79 | 1.0 | -0.28 | 0.28 | -0.0011 | -0.048 | -0.064 | 0.021 |
| Prop_h | -0.021 | -0.06 | -0.11 | -0.0074 | 0.066 | 0.045 | 0.017 | 0.032 | -0.022 | 0.17 | 0.26 | 0.12 | -0.2 | -0.28 | 1.0 | -1.0 | -0.027 | -0.086 | -0.094 | 0.007 |
| Prop_f | 0.021 | 0.06 | 0.11 | 0.0074 | -0.066 | -0.045 | -0.017 | -0.032 | 0.022 | -0.17 | -0.26 | -0.12 | 0.2 | 0.28 | -1.0 | 1.0 | 0.027 | 0.086 | 0.094 | -0.007 |
| reqgoo1 | -0.11 | 0.0087 | 0.036 | -0.23 | 0.13 | 0.047 | -0.031 | -0.0071 | -0.11 | 0.00094 | 0.026 | 0.086 | -0.02 | -0.0011 | -0.027 | 0.027 | 1.0 | 0.66 | 0.57 | 0.32 |
| reqgoo2 | -0.0019 | -0.14 | 0.067 | -0.25 | 0.14 | 0.11 | -0.094 | -0.004 | 0.0073 | 0.13 | 0.036 | -0.09 | -0.096 | -0.048 | -0.086 | 0.086 | 0.66 | 1.0 | 0.89 | 0.3 |
| reqgoo3 | -0.042 | -0.14 | 0.077 | -0.28 | 0.16 | 0.11 | -0.097 | -0.007 | -0.035 | 0.14 | 0.057 | -0.086 | -0.12 | -0.064 | -0.094 | 0.094 | 0.57 | 0.89 | 1.0 | 0.32 |
| TauxGrippe | 0.028 | 0.07 | 0.068 | -0.41 | 0.19 | 0.11 | -0.011 | -0.016 | 0.044 | -0.036 | -0.013 | -0.025 | 0.038 | 0.021 | 0.007 | -0.007 | 0.32 | 0.3 | 0.32 | 1.0 |

Unlike the previous technique, here three new features seem relevant to the model. Judging from the `TauxGrippe` row, they are `t` (τ = -0.41), `reqgoo1` and `reqgoo3` (τ = 0.32 each), with `reqgoo2` just behind (0.3). These features are completely different from the one detected by the first method: `week` has τ = 0.028 with the target. It therefore becomes hard to make a decision, since the two results do not overlap at all.
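The disagreement is less surprising once you recall what Kendall's τ measures. It is a rank correlation, so it only captures monotonic association. The quick check below uses synthetic data, not the flu data: a weekly cosine is assumed here purely for illustration. It shows that a perfectly deterministic but seasonal relationship can have a τ close to zero, even though a tree-based score such as PPS would typically still detect it.

```python
import numpy as np
from scipy.stats import kendalltau

weeks = np.arange(1, 53)

# Monotonic relationship: y always increases with the week number
monotonic = np.log(weeks)
# Deterministic but non-monotonic relationship: a seasonal peak around the new year
seasonal = np.cos(2 * np.pi * weeks / 52)

tau_monotonic, _ = kendalltau(weeks, monotonic)
tau_seasonal, _ = kendalltau(weeks, seasonal)

print(round(tau_monotonic, 2))  # 1.0
print(round(tau_seasonal, 2))   # close to 0, although y is a function of week
```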

- Combined ranking (ensemble of methods)

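```python
# Per-method scores: ranks[method][feature] -> score in [0, 1]
ranks = {}

def ranking(scores, names, order=1):
    """Min-max scale a list of scores to [0, 1] and map them to feature names.

    Use order=-1 when a smaller value means a better feature (e.g. RFE ranking_).
    """
    scaled = MinMaxScaler().fit_transform(order * np.array([scores]).T).T[0]
    scaled = map(lambda x: round(x, 2), scaled)
    return dict(zip(names, scaled))

colnames = features.columns
```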
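To see what `ranking` does with RFE output, here is a small self-contained check. RFE assigns rank 1 to the best feature, which is why `order=-1` flips the sign before min-max scaling. The best feature then gets 1.0 and the first eliminated one gets 0.0. The feature names `a`, `b`, `c` are placeholders.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler


def ranking(scores, names, order=1):
    """Same logic as the tutorial's helper: optional sign flip, then min-max scaling."""
    scaled = MinMaxScaler().fit_transform(order * np.array([scores]).T).T[0]
    # float() only makes the printed dictionary easier to read
    return dict(zip(names, [float(round(x, 2)) for x in scaled]))


# RFE ranking_: 1 = kept until the end (best), 3 = eliminated first (worst)
print(ranking([1.0, 2.0, 3.0], ["a", "b", "c"], order=-1))  # {'a': 1.0, 'b': 0.5, 'c': 0.0}

# Importances such as |coef_|: larger is better, so keep order=1
print(ranking([1.0, 3.0, 5.0], ["a", "b", "c"]))  # {'a': 0.0, 'b': 0.5, 'c': 1.0}
```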

```python
# -- Decision tree, ranked with recursive feature elimination (RFE)
# No random_state is set, so the tree-based rankings can change between runs
from sklearn.feature_selection import RFE, f_regression
from sklearn.tree import DecisionTreeRegressor

tree = DecisionTreeRegressor()
Dtree = RFE(tree, n_features_to_select=1, verbose=3)
Dtree.fit(features, target)
ranks["Dtree"] = ranking(list(map(float, Dtree.ranking_)), colnames, order=-1)
```

With `verbose=3`, RFE prints one line per elimination step, from "Fitting estimator with 19 features." down to "Fitting estimator with 2 features.", until a single feature remains.
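RFE can be hard to picture from the log alone. The sketch below re-implements the elimination loop for a linear model, assuming `step=1` and `n_features_to_select=1` as in this tutorial. It then checks that the loop reproduces scikit-learn's `RFE.ranking_` on synthetic data from `make_regression`. The helper `manual_rfe_ranking` is hypothetical, written only for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression


def manual_rfe_ranking(X, y, estimator_factory):
    """Hypothetical helper: RFE with step=1 until a single feature remains."""
    n_features = X.shape[1]
    support = np.ones(n_features, dtype=bool)
    ranking = np.ones(n_features, dtype=int)
    while support.sum() > 1:
        remaining = np.flatnonzero(support)
        model = estimator_factory().fit(X[:, remaining], y)
        # Drop the remaining feature with the smallest absolute coefficient
        support[remaining[np.argmin(np.abs(model.coef_))]] = False
        # Every eliminated feature moves one rank further down
        ranking[~support] += 1
    return ranking


X, y = make_regression(n_samples=200, n_features=6, n_informative=6,
                       noise=1.0, random_state=0)

rfe = RFE(LinearRegression(), n_features_to_select=1).fit(X, y)
print(rfe.ranking_)
print(manual_rfe_ranking(X, y, LinearRegression))  # same ranks as above
```

The feature eliminated first ends up with the largest rank. That is why the tutorial passes `order=-1` to `ranking`.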

```python
# -- Extra-trees regressor, ranked with RFE
from sklearn.ensemble import ExtraTreesRegressor

Xtree = ExtraTreesRegressor()
EXtree = RFE(Xtree, n_features_to_select=1, verbose=3)
EXtree.fit(features, target)
ranks["EXtree"] = ranking(list(map(float, EXtree.ranking_)), colnames, order=-1)
```


```python
# -- Random forest regressor, ranked with RFE
from sklearn.ensemble import RandomForestRegressor

RF = RandomForestRegressor()
RandF = RFE(RF, n_features_to_select=1, verbose=3)
RandF.fit(features, target)
ranks["RandF"] = ranking(list(map(float, RandF.ranking_)), colnames, order=-1)
```


```python
# -- AdaBoost regressor, ranked with RFE
from sklearn.ensemble import AdaBoostRegressor

Adb = AdaBoostRegressor()
AdaBoost = RFE(Adb, n_features_to_select=1, verbose=3)
AdaBoost.fit(features, target)
ranks["AdaBoost"] = ranking(list(map(float, AdaBoost.ranking_)), colnames, order=-1)
```


```python
# -- Gradient boosting regressor, ranked with RFE
from sklearn.ensemble import GradientBoostingRegressor

GBT = GradientBoostingRegressor(n_estimators=100, learning_rate=1.0, max_depth=1, random_state=0)
GradBoost = RFE(GBT, n_features_to_select=1, verbose=3)
GradBoost.fit(features, target)
ranks["GradBoost"] = ranking(list(map(float, GradBoost.ranking_)), colnames, order=-1)
```


```python
# -- Linear regression, ranked with RFE on the absolute coefficients
# LinearRegression no longer accepts `normalize` (removed in scikit-learn 1.2)
lr = LinearRegression()
# Stop the search when only the last feature is left
LinReg = RFE(lr, n_features_to_select=1, verbose=3)
LinReg.fit(features, target)
ranks["LinReg"] = ranking(list(map(float, LinReg.ranking_)), colnames, order=-1)
```

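The linear scores (LinReg, Ridge, Lasso) come with a caveat. They rank features by the magnitude of their coefficients, and these magnitudes depend on the units of each feature. Here the features are not standardized before fitting, which could contribute to the very different ordering these models produce compared with the tree-based ones. The synthetic sketch below shows the effect and how standardizing with `StandardScaler` restores comparable coefficients. It assumes two equally informative features, one recorded in units 1000 times larger.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
# Both features contribute equally to the target...
y = 3 * X[:, 0] + 3 * X[:, 1]
# ...but the second one is recorded in units 1000 times larger
X_units = X.copy()
X_units[:, 1] *= 1000

raw_coef = LinearRegression().fit(X_units, y).coef_
scaled_model = make_pipeline(StandardScaler(), LinearRegression()).fit(X_units, y)
scaled_coef = scaled_model.named_steps["linearregression"].coef_

print(np.abs(raw_coef))     # second coefficient is about 1000 times smaller
print(np.abs(scaled_coef))  # comparable magnitudes after standardization
```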

```python
# -- Ridge: score = |coefficient|
ridge = Ridge(alpha=7)
ridge.fit(features, target)
ranks['Ridge'] = ranking(np.abs(ridge.coef_), colnames)

# -- Lasso: score = |coefficient| (0 for features the L1 penalty discards)
lasso = Lasso(max_iter=100000, alpha=.05)
lasso.fit(features, target)
ranks["Lasso"] = ranking(np.abs(lasso.coef_), colnames)
```
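Ridge and Lasso do not behave the same way. Lasso's L1 penalty can set coefficients exactly to zero, so those features get a score of 0 after `ranking`. Ridge, by contrast, shrinks coefficients without zeroing them. Here is a minimal synthetic illustration with two informative features and four pure-noise features. The data and the Lasso `alpha` are assumptions chosen for the example, not values from the tutorial.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

rng = np.random.default_rng(42)
X = rng.normal(size=(300, 6))
# Only the first two columns influence the target; columns 2 to 5 are noise
y = 5 * X[:, 0] + 3 * X[:, 1] + rng.normal(scale=0.5, size=300)

ridge_coef = Ridge(alpha=7).fit(X, y).coef_
lasso_coef = Lasso(alpha=0.5).fit(X, y).coef_

print(np.round(ridge_coef, 3))  # small but non-zero weights on the noise columns
print(np.round(lasso_coef, 3))  # noise columns set exactly to 0
```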

```python
# -- XGBoost: built-in feature importances
xgb = XGBRegressor()
xgb.fit(features, target)
ranks["Xgbt"] = ranking(xgb.feature_importances_, colnames)
```

```python
# Dictionary holding, for each feature, the mean of its scores across all methods
r = {}
for name in colnames:
    r[name] = round(np.mean([ranks[method][name] for method in ranks.keys()]), 2)

methods = sorted(ranks.keys())
ranks["Mean"] = r
methods.append("Mean")
```
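The same averaging can be written more compactly with pandas, because `ranks` is a dictionary of dictionaries (method → feature → score). The `ranks` below is toy data with placeholder feature names, used only to illustrate the mechanics.

```python
import pandas as pd

# Toy scores (not the tutorial's results): method -> {feature: normalised score}
ranks = {
    "Dtree": {"feature_a": 1.0, "feature_b": 0.5},
    "Ridge": {"feature_a": 0.0, "feature_b": 1.0},
}

# Rows = features, columns = methods; then average across the methods
scores = pd.DataFrame(ranks)
scores["Mean"] = scores.mean(axis=1).round(2)
print(scores.sort_values("Mean", ascending=False))
```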

```python
# One column per method; "Mean" also averages the Lasso scores, which are not displayed
shown = ["Ridge", "LinReg", "Xgbt", "Dtree", "EXtree", "RandF", "AdaBoost", "GradBoost", "Mean"]
ranking_score = pd.DataFrame({m: [ranks[m][name] for name in colnames] for m in shown})
ranking_score.insert(0, "Features", list(colnames))
ranking_score.sort_values(by="Mean", ascending=False, inplace=True)

ranking_score
```

|  | Features | Ridge | LinReg | Xgbt | Dtree | EXtree | RandF | AdaBoost | GradBoost | Mean |
|---|---|---|---|---|---|---|---|---|---|---|
| 18 | reqgoo3 | 0.19 | 0.61 | 1.00 | 0.89 | 0.89 | 0.89 | 0.94 | 0.94 | 0.73 |
| 3 | t | 0.03 | 0.56 | 0.12 | 0.94 | 0.94 | 0.94 | 1.00 | 0.89 | 0.61 |
| 0 | week | 0.01 | 0.33 | 0.15 | 1.00 | 1.00 | 1.00 | 0.89 | 1.00 | 0.60 |
| 10 | 20_39ans | 0.37 | 0.94 | 0.04 | 0.39 | 0.33 | 0.39 | 0.56 | 0.56 | 0.50 |
| 16 | reqgoo1 | 0.03 | 0.50 | 0.09 | 0.50 | 0.83 | 0.44 | 0.72 | 0.83 | 0.44 |
| 1 | region_code | 0.00 | 0.17 | 0.07 | 0.83 | 0.61 | 0.78 | 0.67 | 0.78 | 0.43 |
| 9 | 0_19ans | 0.01 | 0.83 | 0.16 | 0.61 | 0.78 | 0.56 | 0.83 | 0.00 | 0.42 |
| 6 | pression | 0.00 | 0.00 | 0.04 | 0.72 | 0.72 | 0.83 | 0.61 | 0.72 | 0.40 |
| 8 | Year | 1.00 | 0.39 | 0.00 | 0.11 | 0.67 | 0.22 | 0.11 | 0.06 | 0.40 |
| 2 | ff | 0.02 | 0.44 | 0.02 | 0.44 | 0.39 | 0.50 | 0.78 | 0.50 | 0.34 |
| 4 | u | 0.01 | 0.22 | 0.02 | 0.78 | 0.44 | 0.72 | 0.06 | 0.67 | 0.33 |
| 13 | 75etplus | 0.22 | 1.00 | 0.08 | 0.22 | 0.17 | 0.28 | 0.50 | 0.28 | 0.31 |
| 5 | n | 0.00 | 0.06 | 0.02 | 0.56 | 0.28 | 0.61 | 0.39 | 0.61 | 0.28 |
| 7 | precipitation | 0.00 | 0.28 | 0.03 | 0.67 | 0.50 | 0.67 | 0.28 | 0.11 | 0.28 |
| 12 | 60_74ans | 0.29 | 0.89 | 0.03 | 0.17 | 0.22 | 0.33 | 0.00 | 0.22 | 0.24 |
| 11 | 40_59ans | 0.06 | 0.78 | 0.02 | 0.28 | 0.11 | 0.11 | 0.44 | 0.17 | 0.22 |
| 17 | reqgoo2 | 0.00 | 0.11 | 0.08 | 0.33 | 0.56 | 0.17 | 0.22 | 0.44 | 0.21 |
| 15 | Prop_f | 0.04 | 0.72 | 0.00 | 0.06 | 0.06 | 0.06 | 0.17 | 0.39 | 0.17 |
| 14 | Prop_h | 0.04 | 0.67 | 0.04 | 0.00 | 0.00 | 0.00 | 0.33 | 0.33 | 0.16 |

```python
# Features whose mean score is above 0.5
list(ranking_score[ranking_score['Mean'] > 0.5].Features)
# ['reqgoo3', 't', 'week']
```

```python
import warnings

warnings.filterwarnings("ignore")

# Put the mean scores into a pandas DataFrame, sorted from best to worst
meanplot = pd.DataFrame(list(r.items()), columns=['Feature', 'Mean Ranking'])
meanplot = meanplot.sort_values('Mean Ranking', ascending=False)

# Plot the mean ranking of the features
# (seaborn 0.9 renamed factorplot to catplot and its `size` argument to `height`)
plot = sns.catplot(x="Mean Ranking", y="Feature", data=meanplot, kind="bar",
                   height=14, aspect=1.9, palette='coolwarm')
```

This last technique combines several methods. By averaging their scores, it takes advantage of many different approaches at once. It remains heuristic, since you have to choose a threshold value (0.5 here). Even so, it is a safer way to avoid discarding relevant features or keeping less important ones.
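Because the threshold is a free choice, it is worth checking how sensitive the selection is to it. The sketch below reuses the Mean scores from the ranking table of this tutorial and counts the features kept for a few candidate thresholds. The thresholds themselves are arbitrary examples.

```python
import pandas as pd

# Mean scores copied from the ranking table of this tutorial
mean_scores = pd.Series({
    "reqgoo3": 0.73, "t": 0.61, "week": 0.60, "20_39ans": 0.50, "reqgoo1": 0.44,
    "region_code": 0.43, "0_19ans": 0.42, "pression": 0.40, "Year": 0.40, "ff": 0.34,
    "u": 0.33, "75etplus": 0.31, "n": 0.28, "precipitation": 0.28, "60_74ans": 0.24,
    "40_59ans": 0.22, "reqgoo2": 0.21, "Prop_f": 0.17, "Prop_h": 0.16,
})

# Strict ">" as in the tutorial, so a score equal to the threshold is excluded
for threshold in (0.5, 0.45, 0.4):
    selected = list(mean_scores[mean_scores > threshold].index)
    print(threshold, len(selected), selected)
```

Moving the threshold from 0.5 to 0.4 grows the selection from 3 to 7 features. This is a reminder to justify the cut-off, for example by validating a model on each candidate subset.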